GAMER FrameWork

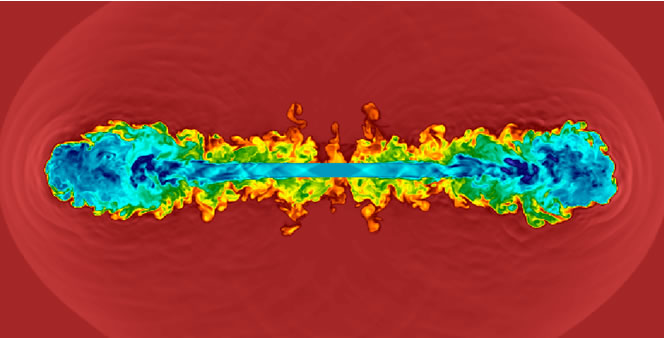

GAMER is a GPU-accelerated Adaptive MEsh Refinement code for astrophysical applications. The code currently solves hydrodynamics with self-gravity. By exploiting the performance of GPUs, it has demonstrated speed-ups of up to two orders of magnitude over CPU-only runs.

Currently the code supports the following features:

- Adaptive mesh refinement (AMR)

- Hydrodynamics with self-gravity

- A variety of GPU-accelerated hydrodynamic and Poisson solvers

- Hybrid OpenMP/MPI/GPU parallelization

- Concurrent CPU/GPU execution for performance optimization

- Hilbert space-filling curve for load balance

GAMER is mainly developed by Hsi-Yu Schive, Yu-Chih Tsai, and Prof. Tzihong Chiueh at the Computational Astrophysics Laboratory (CALab) at National Taiwan University. The code has been tested extensively on the GPU cluster Dirac at the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory (NERSC/LBNL), in close collaboration with Hemant Shukla at the International Center for Computing Science (ICCS).

Code Description

- AMR

The key idea of adaptive mesh refinement (AMR) is to locally and adaptively increase the simulation resolution around high-density and/or high-gradient regions, so that limited computational resources are spent where they are needed most. This is especially important for applications requiring a high dynamic range. The AMR implementation in GAMER is summarized as follows.

- The computational domain is covered by a hierarchy of grid blocks. Every block has the same number of cells (e.g., 8³ cells per block), but blocks can have different spatial resolutions according to the user-defined refinement criteria.

- Octree data structure.

- Individual time-step integration. Blocks at different refinement levels may have different evolution time-steps.
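The block hierarchy and individual time-steps listed above can be sketched in a few lines of Python. This is only an illustrative sketch, not GAMER's actual data structure: the `Block` class, the single unit-size root block, and the factor-of-two time-step ratio between adjacent levels are all assumptions made for the example.

```python
from dataclasses import dataclass, field

CELLS_PER_SIDE = 8  # 8^3 cells per block, matching the example in the text


@dataclass
class Block:
    """One grid block in a hypothetical octree (names are illustrative)."""
    level: int
    corner: tuple  # (x, y, z) of the lower corner, in units of the box size
    children: list = field(default_factory=list)

    def width(self):
        # Assumes one root block covering a unit box; each level halves the width.
        return 1.0 / 2 ** self.level

    def cell_size(self):
        # Every block holds the same number of cells, so finer blocks have finer cells.
        return self.width() / CELLS_PER_SIDE

    def refine(self):
        """Split this block into 8 children with the same cell count each."""
        half = self.width() / 2
        x, y, z = self.corner
        self.children = [
            Block(self.level + 1, (x + i * half, y + j * half, z + k * half))
            for i in (0, 1) for j in (0, 1) for k in (0, 1)
        ]
        return self.children


def advance(level, max_level, dt, log):
    """Record the update order, assuming level l+1 takes two half-steps per step of level l."""
    log.append((level, dt))
    if level < max_level:
        advance(level + 1, max_level, dt / 2, log)
        advance(level + 1, max_level, dt / 2, log)


root = Block(level=0, corner=(0.0, 0.0, 0.0))
kids = root.refine()
order = []
advance(0, 2, 1.0, order)
print(len(kids), root.cell_size(), kids[0].cell_size())
print(order)
```

The printed update order shows why finer levels cost more per unit of simulated time: under the assumed ratio, each additional level doubles the number of sub-steps.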

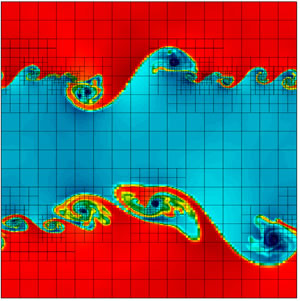

Figure 1 shows an example of using GAMER to simulate the Kelvin-Helmholtz instability, in which fine grids are allocated in regions of high vorticity.

Figure 1: 2D domain refinement for simulating the Kelvin-Helmholtz instability with GAMER. Each grid represents a block with 8² cells.

- Numerical Schemes

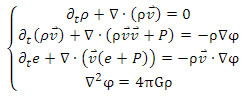

- Currently GAMER solves hydrodynamics with self-gravity. The governing equations are the Euler equations and the Poisson equation, whose variables are the mass density, flow velocity, pressure, energy density, and gravitational potential.

- Supports a variety of shock-capturing hydrodynamic schemes, including the relaxing total variation diminishing scheme (RTVD), weighted average flux scheme (WAF), MUSCL-Hancock scheme, and corner transport upwind scheme (CTU).

- Supports different Riemann solvers, including the exact, HLLC, HLLE, and Roe solvers.

- Supports both the piecewise linear method (PLM) and the piecewise parabolic method (PPM) for spatial reconstruction, together with a variety of slope limiters.

- Root-level Poisson solver: FFT.

- Refinement-level Poisson solvers: successive overrelaxation method (SOR) or multigrid.
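For reference, the Euler equations with self-gravity and the Poisson equation named above are commonly written in the conservative form below. The symbols are assumptions chosen here for illustration and may differ from the notation used in the GAMER papers: ρ is the mass density, **v** the flow velocity, P the pressure, E the energy density (taken here to be the total, kinetic plus internal, energy density), φ the gravitational potential, G the gravitational constant, and **I** the identity tensor.

```latex
\begin{align}
  \frac{\partial \rho}{\partial t}
    + \nabla \cdot (\rho \mathbf{v}) &= 0, \\
  \frac{\partial (\rho \mathbf{v})}{\partial t}
    + \nabla \cdot (\rho \mathbf{v}\mathbf{v} + P\,\mathbf{I}) &= -\rho \nabla \phi, \\
  \frac{\partial E}{\partial t}
    + \nabla \cdot \left[ (E + P)\,\mathbf{v} \right] &= -\rho\, \mathbf{v} \cdot \nabla \phi, \\
  \nabla^{2} \phi &= 4 \pi G \rho .
\end{align}
```

The first three equations express conservation of mass, momentum, and energy with gravity as a source term; the last couples the potential back to the density.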


- GPU Implementation

In GAMER, both the hydrodynamic and Poisson solvers have been implemented on GPUs. The multi-GPU implementation is inspired by the three levels of parallelism intrinsic to the block-based AMR scheme:

- The entire simulation domain can be decomposed into many sub-domains ↔ Use different GPUs to calculate different sub-domains.

- Each sub-domain is covered by many grid blocks ↔ Use different CUDA thread blocks to work on different grid blocks.

- Each grid block is composed of many cells (8³ for example) ↔ Use different CUDA threads within the same thread block to compute the solution of different cells within the same grid block.
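The three-level mapping above can be illustrated with plain index arithmetic. The Python sketch below only emulates the bookkeeping and is not GAMER's kernel code: the one-thread-per-cell layout, the function names, and the fixed `blocks_per_gpu` count are assumptions made for the example.

```python
BLOCK_SIDE = 8                        # cells per grid-block side (8^3 cells per block)
THREADS_PER_BLOCK = BLOCK_SIDE ** 3   # assumption: one thread per cell


def thread_to_cell(thread_id, side=BLOCK_SIDE):
    """Unflatten a 1D thread index into (i, j, k) cell indices within a grid block."""
    i = thread_id % side
    j = (thread_id // side) % side
    k = thread_id // (side * side)
    return i, j, k


def work_item(gpu, thread_block, thread_id, blocks_per_gpu):
    """Map (GPU, CUDA thread block, thread) to (global grid-block id, cell index)."""
    grid_block = gpu * blocks_per_gpu + thread_block  # level 1 and level 2
    return grid_block, thread_to_cell(thread_id)      # level 3


print(work_item(gpu=1, thread_block=3, thread_id=73, blocks_per_gpu=100))
```

In an actual CUDA kernel the same roles would be played by the device assigned to the process, `blockIdx`, and `threadIdx`.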

Performance Optimization

- Asynchronous Memory Copy

The data transfer between the host (CPU) and device (GPU) memory is found to take about 30% of the total GPU execution time in both the GPU hydrodynamic and Poisson solvers. To improve performance, this data transfer is performed concurrently with kernel execution by using CUDA streams.

- Concurrent CPU/GPU Execution

A hybrid CPU/GPU model is adopted, in which the time-consuming PDE solvers run on GPUs and the complicated AMR data structure is manipulated by CPUs. In addition, CPUs and GPUs are allowed to work in parallel to further optimize performance.
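To see how much the asynchronous memory copy described above can gain, consider a simple two-stage pipeline model. This is a back-of-the-envelope sketch under stated assumptions, not a measurement: the total GPU time is normalized to 1, the 30% transfer share is taken from the text, transfers are treated as a single stage, and launch overheads are ignored. The function name `pipelined_time` is hypothetical.

```python
def pipelined_time(t_copy, t_kernel, n_chunks):
    """Time for a two-stage pipeline in which the copy of chunk i+1
    overlaps the kernel of chunk i (idealized, no overheads)."""
    c = t_copy / n_chunks     # copy time per chunk
    k = t_kernel / n_chunks   # kernel time per chunk
    # First copy cannot overlap, last kernel cannot overlap,
    # and the slower stage paces everything in between.
    return c + (n_chunks - 1) * max(c, k) + k


serial = pipelined_time(0.3, 0.7, 1)  # no overlap: copy, then compute
for n in (1, 2, 10, 100):
    t = pipelined_time(0.3, 0.7, n)
    print(n, round(t, 3), round(serial / t, 2))
```

Under this model the time approaches the kernel time alone as the work is split into more chunks, so the speed-up from overlapping is bounded by 1/0.7, or about 1.43.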

- Hybrid OpenMP/MPI

A hybrid OpenMP/MPI model is adopted in GAMER in order to fully exploit the computational power of a heterogeneous CPU+GPU cluster. Each MPI process is responsible for one GPU, and the CPU computation allocated to each MPI process is further parallelized with OpenMP. For example, in a GPU cluster with N_node nodes, each equipped with N_core CPU cores and N_GPU GPUs, we can run the simulation with N_node × N_GPU MPI processes and launch N_core/N_GPU OpenMP threads in each MPI process.

- Load Balance

The Hilbert space-filling curve is adopted to improve the load balance among different GPUs. Note that with individual time-step integration, calculations at different levels must be performed sequentially. Therefore, in GAMER the load balance is achieved at each refinement level by drawing different space-filling curves at different levels independently.
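The per-level balancing described above can be sketched as follows. This is a minimal illustration rather than GAMER's implementation: it uses a 2D Hilbert curve for brevity, weights every block equally, and the names `hilbert_index`, `partition_level`, and the sample block layout are hypothetical.

```python
def hilbert_index(n, x, y):
    """Position of cell (x, y) along a 2D Hilbert curve on an n-by-n grid (n a power of 2)."""
    d = 0
    s = n // 2
    while s > 0:
        rx = 1 if (x & s) else 0
        ry = 1 if (y & s) else 0
        d += s * s * ((3 * rx) ^ ry)
        # Rotate/reflect the quadrant so the sub-curve has the standard orientation.
        if ry == 0:
            if rx == 1:
                x = n - 1 - x
                y = n - 1 - y
            x, y = y, x
        s //= 2
    return d


def partition_level(blocks, n, n_gpus):
    """Sort one level's blocks along the curve and cut it into near-equal contiguous pieces."""
    ordered = sorted(blocks, key=lambda b: hilbert_index(n, b[0], b[1]))
    base, extra = divmod(len(ordered), n_gpus)
    parts, start = [], 0
    for rank in range(n_gpus):
        size = base + (1 if rank < extra else 0)
        parts.append(ordered[start:start + size])
        start += size
    return parts


# Each level is balanced independently: (grid size, block coordinates) per level.
levels = {
    0: (4, [(x, y) for x in range(4) for y in range(4)]),        # fully covered root level
    1: (8, [(x, y) for x in range(2, 6) for y in range(2, 5)]),  # refined patch only
}
for level, (n, blocks) in levels.items():
    parts = partition_level(blocks, n, n_gpus=4)
    print(level, [len(p) for p in parts])
```

Because neighbouring positions on the curve are neighbouring cells in space, each contiguous piece tends to be spatially compact, which helps limit communication between GPUs.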

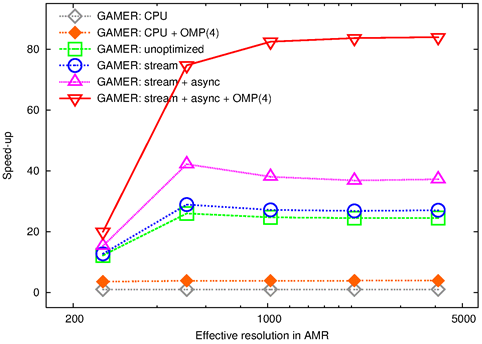

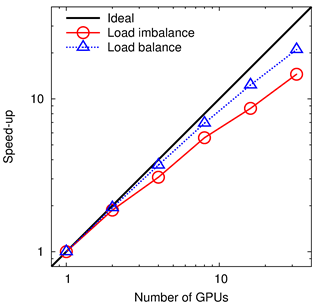

Figure 2 shows the overall performance speed-up with a single GPU and different optimization levels, in which the performance with a single CPU core is regarded as the reference performance. Figure 3 shows the strong scaling in the multi-GPU tests, in which both the load-imbalance (rectangular domain decomposition) and load-balance (space-filling curve) results are shown for comparison.

Figure 2: Overall performance speed-up using a single GPU. The filled and open diamonds show the CPU-only results with and without OpenMP, respectively. The unoptimized GPU performance is shown by the open squares. The open circles, triangles, and inverted triangles show the GPU performance as different optimizations are implemented successively. The abbreviation 'async' represents the optimization of concurrent execution between CPU and GPU, and 'stream' indicates the overlap between memory copy and kernel execution. Four threads are used when OpenMP is enabled. In all data points, the performance of GAMER using a single CPU core is regarded as the reference performance.

Figure 3: Strong scaling of GAMER. The open circles and triangles show the results with rectangular domain decomposition (load imbalance) and Hilbert space-filling curve method (load balance), respectively. The ideal scaling is also shown for comparison (thick line).

Extension

GAMER serves as a general-purpose AMR + GPU framework. Although the code was originally designed for simulating galaxy formation, it can be readily modified to handle a variety of applications with different governing equations. All optimization strategies implemented in the code carry over directly.

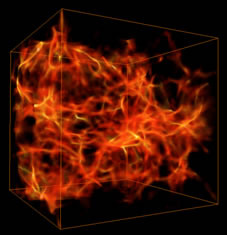

Scalar Field Dark Matter

Figure 4: Scalar field dark matter

As an example, the code has been modified to simulate the Bose-Einstein condensate dark matter model (Figure 4), known as scalar field dark matter (SFDM) or extremely light bosonic dark matter (ELBDM), which serves as an alternative to the standard cold dark matter (CDM) model. This model is expected, on the one hand, to eliminate sub-galactic halos and thereby solve the over-abundance of dwarf galaxies produced in CDM, and on the other hand, to produce the halo cores in galaxies suggested by some observations. In addition, owing to the quantum pressure inherent in the ELBDM model, it may also account for the high concentration parameter in massive clusters reported by recent lensing surveys.

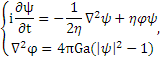

The governing equation of non-relativistic scalar field is the Schrödinger-Poisson equation in the comoving frame:

where![]() is wave function,

is wave function, ![]() is particle mass,

is particle mass, ![]() is gravitational potential, and

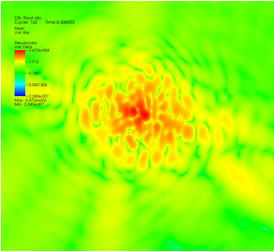

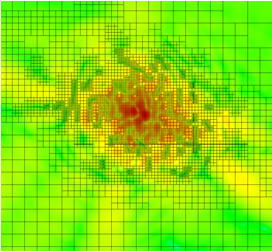

is gravitational potential, and ![]() is scale factor. We have implemented the ELBDM model into GAMER with full support of GPUs and AMR. Extremely high-resolution simulation with dynamic range from 100 Mpc to 0.8 kpc is being tested. Figure 5 shows the density distribution of a dark matter halo in the ELBDM simulation, and Figure 6 shows the underlying grid refinement.

is scale factor. We have implemented the ELBDM model into GAMER with full support of GPUs and AMR. Extremely high-resolution simulation with dynamic range from 100 Mpc to 0.8 kpc is being tested. Figure 5 shows the density distribution of a dark matter halo in the ELBDM simulation, and Figure 6 shows the underlying grid refinement.

Figure 5: Dark matter halo in the ELBDM simulation

Figure 6: Grid distribution corresponding to Figure 5


Future Prospects

- Magnetohydrodynamics (MHD)

- Dark matter particles

- A variety of boundary conditions

- External gravity

- Radiation transfer

- Chemical network

- A variety of baryonic physics, including radiation heating, cooling, star-formation, star-formation feedback, supernovae feedback, AGN feedback, …

References

- GAMER: a GPU-accelerated Adaptive-Mesh-Refinement Code for Astrophysics, Schive, H., Tsai, Y., & Chiueh, T. 2010, ApJS, 186, 457 (arXiv:0907.3390)

- GAMER with Out-of-core Computation, Schive, H., Tsai, Y., & Chiueh, T. 2011, Proceedings of IAU Symposium 270, 6, 401 (arXiv:1007.3818)

- Directionally Unsplit Hydrodynamic Schemes with Hybrid MPI/OpenMP/GPU Parallelization in AMR, Schive, H., Zhang, U., & Chiueh, T. 2011, IJHPCA, accepted for publication, DOI: 10.1177/1094342011428146 (arXiv:1103.3373)

- Multi-science Applications with Single Codebase - GAMER - for Massively Parallel Architectures, Shukla, H., Schive, H., Woo, T., & Chiueh, T. 2011, SC '11 Proceedings of 2011 International Conference for High Performance Computing, Networking, Storage and Analysis, DOI:10.1145/2063384.2063433